Ultra Low Power DSP for Health Care Monitoring

Wireless medical technologies have created opportunities for new methods of preventive care using implanted and body-worn biomedical devices. Designing the technologies that enable these applications requires both reliable delivery of the patient's vital physiological signs and careful energy management in power-constrained devices. Because battery replacement carries a high cost and an even higher risk, these devices must be designed and developed for minimum energy consumption.

In this research, we explore a variety of ultra-low-power DSP techniques for wearable biomedical devices. A blend of feature engineering and machine learning algorithms is employed and evaluated in the context of real-time classification applications. The evaluation is based on two main criteria: (1) classification accuracy and (2) algorithmic complexity (computation, memory, latency). Currently, two case studies are being explored.

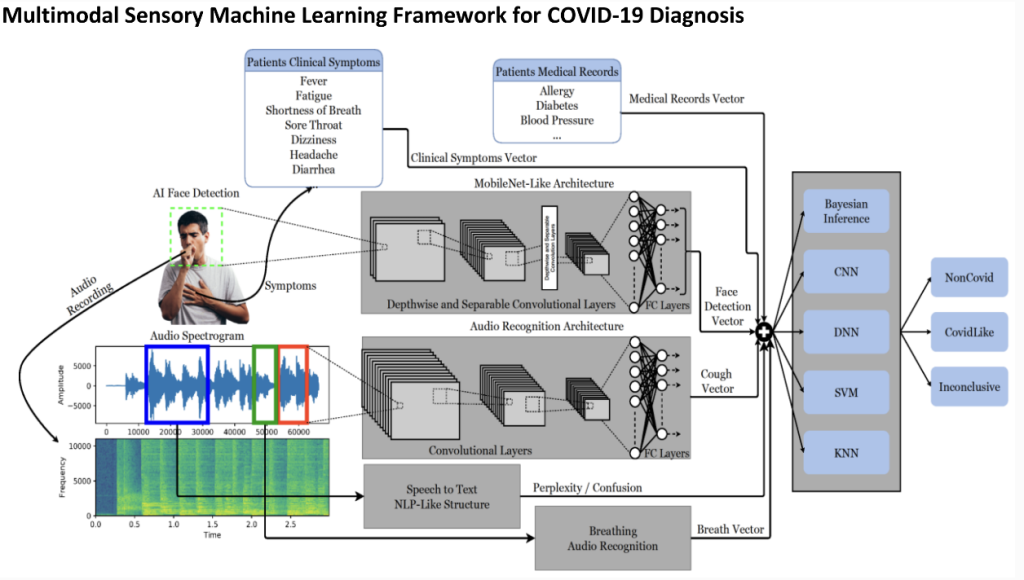

Multimodal Sensory Machine Learning Framework for COVID-19 Diagnosis

The rapid spread of the novel COVID-19 virus around the globe has increased the need for early-stage diagnosis involving IoT and machine learning applications. Clinic-independent machine assistance is critical for the initial detection of COVID-19 symptoms and for assessing the severity of the infection. The objective of this ongoing research is to use machine learning models running on general-purpose processors to replace the assessment doctors perform at triage and in telemedicine, with the help of passively recorded audio/video and self-declared information. The architecture has a deep learning module that extracts symptomatic respiratory features from raw audio signals, a speech-to-text module that estimates the user's confusion as another symptom, a deep convolutional neural network that extracts demographic features, and a questionnaire that collects self-declared data. All the extracted and entered data are jointly given to a final ensemble of classifiers that produces a probability vector for diagnosing the user's infection with COVID-19. The framework is flexible and scalable in that it can easily incorporate data from new sensors, allowing the system to be tailored to a variety of situations, such as in-home consultations, clinical visits, or even symptom detection in public spaces using non-contact sensors.

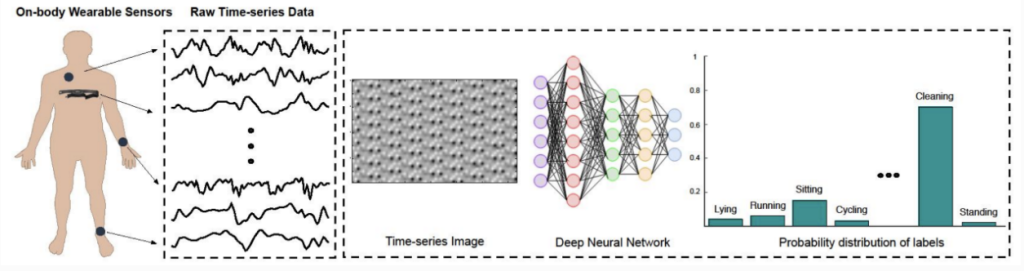
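The final fusion step described above can be illustrated with a small soft-voting sketch, in which each classifier in the ensemble emits a probability vector and the vectors are averaged. Soft voting, the function name `soft_vote`, and the optional weights are assumptions made for illustration; the text does not specify how the ensemble combines its members.

```python
def soft_vote(prob_vectors, weights=None):
    """Fuse per-classifier probability vectors by (weighted) averaging.

    prob_vectors: list of equal-length lists, one per classifier.
    weights: optional per-classifier weights (hypothetical; default is uniform).
    """
    if not prob_vectors:
        raise ValueError("need at least one probability vector")
    n_classes = len(prob_vectors[0])
    if any(len(p) != n_classes for p in prob_vectors):
        raise ValueError("all probability vectors must have the same length")
    if weights is None:
        weights = [1.0] * len(prob_vectors)
    if len(weights) != len(prob_vectors):
        raise ValueError("one weight per classifier is required")
    total = float(sum(weights))
    # Weighted mean per class; the result still sums to 1 if every input does.
    return [
        sum(w * p[c] for w, p in zip(weights, prob_vectors)) / total
        for c in range(n_classes)
    ]


# Example: two classifiers voting on (negative, positive).
fused = soft_vote([[0.8, 0.2], [0.6, 0.4]])
```

Averaging keeps the output a valid probability vector, so a downstream threshold or severity rule can be applied to it directly.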

Low Power Deep Neural Network Architectures for Physical Activity Monitoring

Many applications today use the movements, gestures, and postures of human activities to map physiology-oriented tasks or to build sensing devices for body actions. This requires prompt recognition of activities, and for embedded applications low-power designs are preferred over their power-hungry counterparts. The physical activity case study under investigation is implemented using deep neural networks (DNNs). Architecturally, the DNNs can be deployed as standalone convolutional or LSTM (Long Short-Term Memory) networks that extract relevant feature maps from raw time-series input signals, followed by fully connected layers that summarize the inference output. With SoftMax activation on the final fully connected layer, this inference output takes the form of a probability distribution over the activity classes.
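As a rough illustration of the pipeline described above (convolutional feature extraction followed by a fully connected layer with SoftMax), the sketch below runs a single forward pass in plain Python. The kernels, weights, and the global-average-pooling step are illustrative assumptions and not the networks used in this project.

```python
import math


def conv1d(signal, kernel):
    """Valid-mode 1-D cross-correlation, the operation used by CNN layers."""
    k = len(kernel)
    return [
        sum(signal[i + j] * kernel[j] for j in range(k))
        for i in range(len(signal) - k + 1)
    ]


def relu(values):
    return [max(0.0, v) for v in values]


def softmax(logits):
    """Numerically stable SoftMax: subtract the max before exponentiating."""
    peak = max(logits)
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify(signal, kernels, dense_w, dense_b):
    """Conv -> ReLU -> global average pooling -> dense -> SoftMax."""
    features = []
    for kernel in kernels:
        fmap = relu(conv1d(signal, kernel))
        features.append(sum(fmap) / len(fmap))  # one pooled feature per kernel
    logits = [
        sum(w * f for w, f in zip(row, features)) + b
        for row, b in zip(dense_w, dense_b)
    ]
    return softmax(logits)


# Hypothetical accelerometer trace, two kernels, three activity classes.
probs = classify(
    signal=[0.0, 1.0, 0.0, -1.0, 0.0, 1.0],
    kernels=[[1.0, -1.0], [0.5, 0.5]],
    dense_w=[[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]],
    dense_b=[0.0, 0.0, 0.0],
)
```

The returned list has one entry per class and sums to 1, which is the probability-distribution form of the inference output.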

An Energy Efficient and Flexible Multichannel Electroencephalogram (EEG) Artifact Detection

This project aims to develop energy-efficient and flexible multichannel electroencephalogram (EEG) artifact detection and identification networks, together with their reconfigurable hardware implementations. EEG signals are recordings of brain activity. Components of EEG recordings that do not originate from cerebral activity are termed artifacts. Our proposed models do not need expert knowledge for feature extraction or pre-processing of EEG data, and their architectures are efficient enough to be implemented on mobile devices. The proposed networks can be reconfigured for any number of EEG channels and artifact classes. Experiments were carried out with different deep learning models (i.e., CNN, depthwise separable CNN, LSTM, Conv-LSTM) with the goal of maximizing detection/identification accuracy while minimizing the number of weight parameters and required operations.

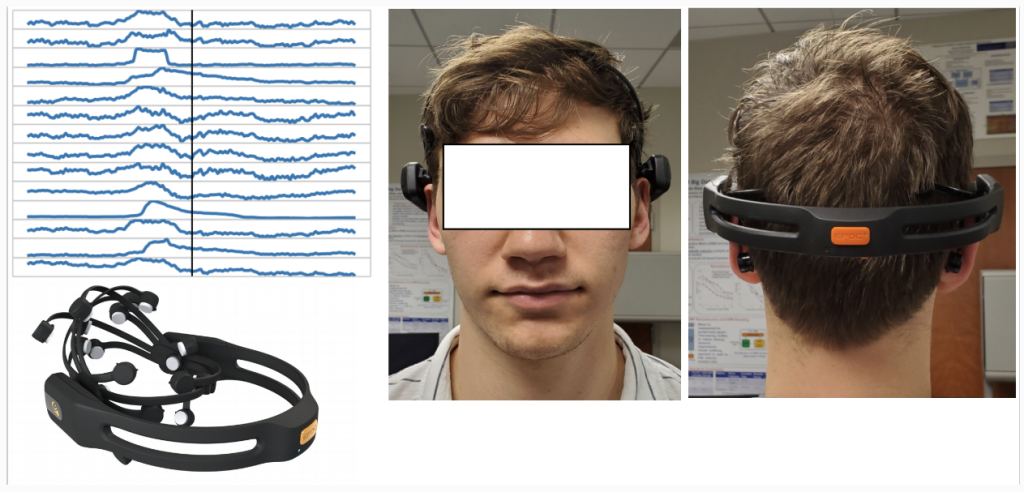

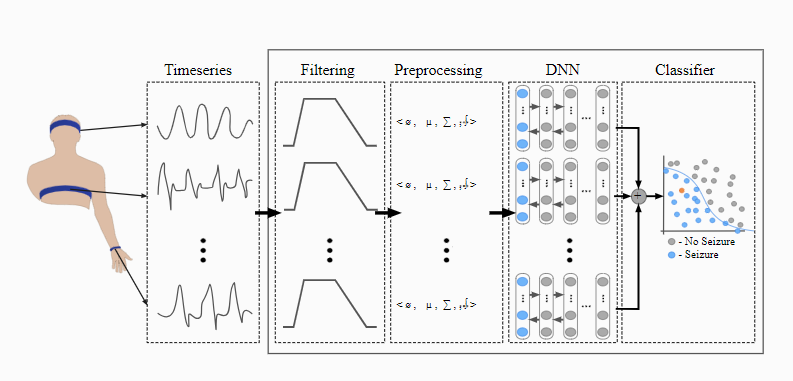
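To make the parameter and operation trade-off concrete, the sketch below compares the weight count of a standard convolution layer with that of a depthwise separable one, using the usual textbook formulas (biases ignored). The layer sizes in the example are hypothetical and are not taken from the networks evaluated in this project.

```python
def standard_conv_params(k_h, k_w, c_in, c_out):
    """Weights in a standard convolution: one k_h x k_w x c_in filter per output channel."""
    return k_h * k_w * c_in * c_out


def separable_conv_params(k_h, k_w, c_in, c_out):
    """Weights in a depthwise separable convolution.

    Depthwise stage: one k_h x k_w filter per input channel.
    Pointwise stage: a 1 x 1 convolution mixing c_in channels into c_out.
    """
    depthwise = k_h * k_w * c_in
    pointwise = c_in * c_out
    return depthwise + pointwise


def conv_macs(params, out_h, out_w):
    """Multiply-accumulates: every weight is used once per output position."""
    return params * out_h * out_w


# Hypothetical layer: 3x3 kernel, 16 input channels, 32 output channels.
std = standard_conv_params(3, 3, 16, 32)
sep = separable_conv_params(3, 3, 16, 32)
reduction = std / sep
```

For this example layer the standard convolution has 4608 weights and the separable one has 656, roughly a 7x reduction; the same ratio carries over to multiply-accumulate operations when both layers produce an output of the same size.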

EEG-based Seizure Detection

This case study addresses the detection of seizures in epileptic patients using multiple physiological signals in an ambulatory setting. In a clinical setting, the de facto gold standard for seizure detection is EEG plus video monitoring. In an ambulatory setting, video is less viable and EEG can only be recorded with a limited number of electrodes and at higher noise levels. This work looks at incorporating other physiological signals such as ECG, respiration, and motion to boost classification performance in an ambulatory setting, where noise is more prevalent. These multi-physiological signals will be fed into a deep neural network that performs both multivariate feature abstraction and seizure classification.

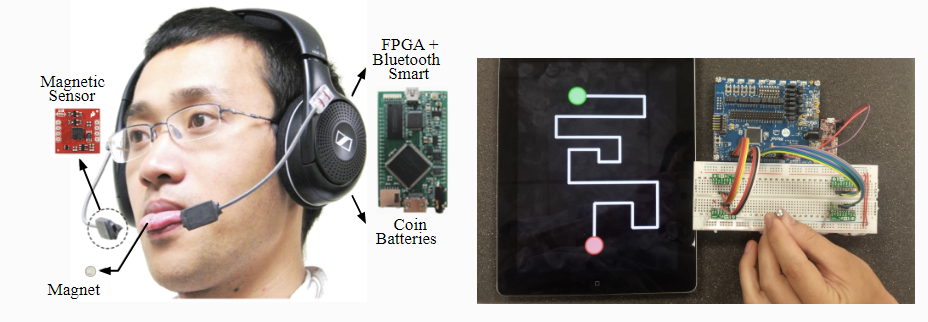
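Before signals such as EEG, ECG, respiration, and motion can be fed jointly into a network, they typically need to be cut into time-aligned windows even though they are sampled at different rates. The sketch below shows one simple way to do that; the function names, sampling rates, and window length are assumptions for illustration, not details of this project's pipeline.

```python
def window_signal(samples, rate_hz, window_s):
    """Split a signal into non-overlapping windows of window_s seconds."""
    n = int(round(rate_hz * window_s))
    if n <= 0:
        raise ValueError("window must contain at least one sample")
    return [samples[i:i + n] for i in range(0, len(samples) - n + 1, n)]


def align_windows(signals, window_s):
    """Group windows from several signals by time index.

    signals: dict mapping a name to (samples, rate_hz); all recordings are
    assumed to start at the same instant.
    Returns a list with one dict per window: name -> samples in that window.
    """
    per_signal = {
        name: window_signal(samples, rate, window_s)
        for name, (samples, rate) in signals.items()
    }
    # Keep only the windows that every signal can fill completely.
    n_windows = min(len(w) for w in per_signal.values())
    return [
        {name: windows[i] for name, windows in per_signal.items()}
        for i in range(n_windows)
    ]


# Hypothetical 2-second recording: EEG at 256 Hz, ECG at 128 Hz.
recording = {
    "eeg": ([0.0] * 512, 256),
    "ecg": ([0.0] * 256, 128),
}
windows = align_windows(recording, window_s=1.0)
```

Each element of `windows` then holds one time slice of every modality, which can be passed to per-signal input branches of a network or flattened into a single input vector.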

Tongue Drive System (TDS)

This case study is an assistive technology that enables a user to interact with their surroundings using a tongue-driven interface. The Tongue Drive System (TDS) is a wearable device that allows real-time tracking of tongue motion in the oral space by sensing changes in the magnetic field generated by a small magnetic marker attached to the user's tongue. Four strategically placed 3D magnetometers are used to measure the magnetic field. Efficient DSP and machine learning algorithms are devised to remove noise and artifacts such as Earth's magnetic field and to triangulate the location of the magnet, all locally on the sensor node.
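The physics behind this sensing scheme can be sketched with the standard point-dipole model for the field of a small magnet, plus a simple background subtraction for Earth's field. This is a minimal sketch under stated assumptions: the baseline-subtraction approach, the function names, and the numeric values are hypothetical, and the text does not describe the actual algorithms used in the TDS.

```python
import math

MU0_OVER_4PI = 1e-7  # T*m/A (vacuum permeability divided by 4*pi, approximately)


def dipole_field(moment, r):
    """Field in tesla of a point dipole at displacement r from the magnet.

    moment: magnetic moment vector [mx, my, mz] in A*m^2.
    r: vector from the magnet to the sensor, in metres.
    Uses B = (mu0 / 4pi) * (3 * r_hat * (m . r_hat) - m) / |r|^3.
    """
    dist = math.sqrt(sum(c * c for c in r))
    if dist == 0.0:
        raise ValueError("sensor cannot coincide with the magnet")
    r_hat = [c / dist for c in r]
    m_dot_r = sum(m * u for m, u in zip(moment, r_hat))
    return [
        MU0_OVER_4PI * (3.0 * m_dot_r * u - m) / dist ** 3
        for m, u in zip(moment, r_hat)
    ]


def remove_background(reading, baseline):
    """Subtract a baseline (e.g. Earth's field recorded with the magnet absent)."""
    return [x - b for x, b in zip(reading, baseline)]


# Hypothetical values: magnet 10 cm from a sensor, aligned with the z axis.
magnet_only = dipole_field([0.0, 0.0, 1.0], [0.0, 0.0, 0.1])
earth = [2e-5, 0.0, -4e-5]
raw = [b + e for b, e in zip(magnet_only, earth)]
cleaned = remove_background(raw, earth)
```

Because the dipole field falls off with the cube of distance, readings from several magnetometers at known positions constrain where the magnet can be; a localization routine could fit the magnet position in this model to the background-corrected readings of all four sensors.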