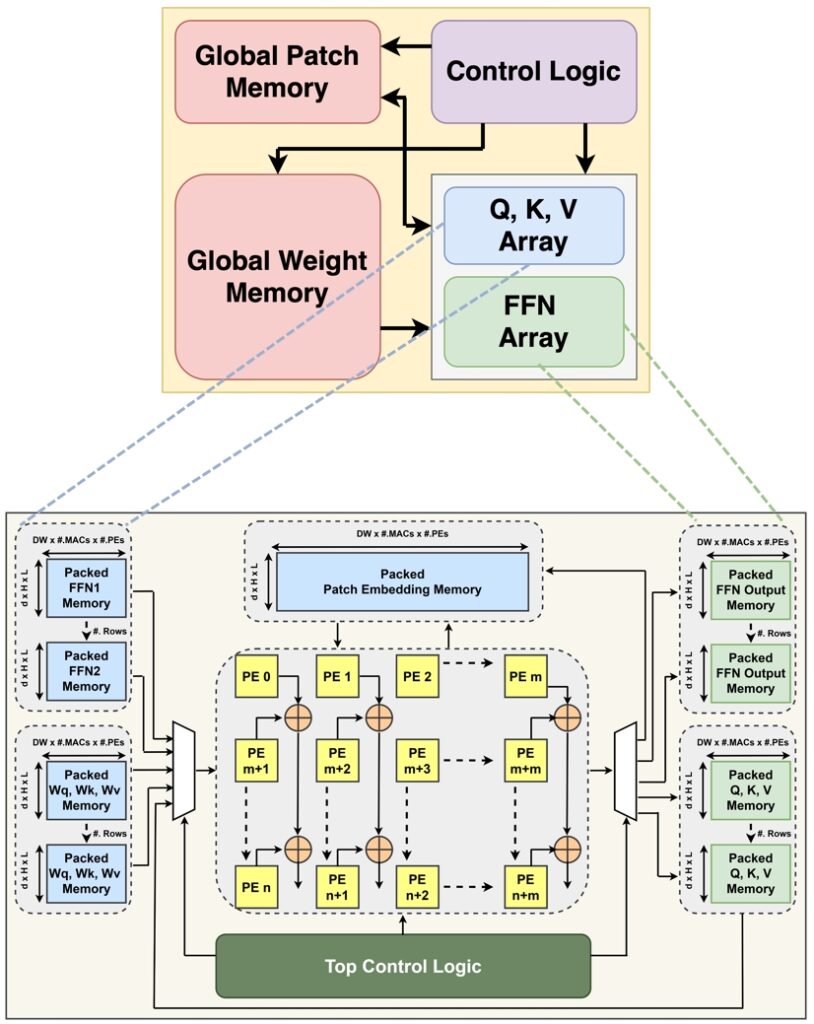

Regression-Based Hardware Architecture Exploration for Vision Transformer Acceleration

Deep learning models, particularly Vision Transformers (ViTs), offer state-of-the-art performance in visual understanding but demand efficient deployment on constrained edge platforms. We present a regression-based hardware architecture exploration approach to accelerate ViTs using FPGAs. By modeling the computational characteristics of transformer components—such as attention and linear projection layers—we derive optimized configurations of processing elements (PEs) for different submodules. Through deterministic regression-based search, we identify quantized architectures (e.g., 8-bit DeiT) that significantly reduce model size and energy consumption without sacrificing accuracy. This framework enables low-latency, memory-efficient vision inference on resource-limited embedded systems, advancing deployable AI at the edge.
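To make the 8-bit quantization mentioned above concrete, here is a minimal sketch of symmetric per-tensor int8 quantization. It is an illustration only: the scheme, the function names `quantize_int8` and `dequantize`, and the sample weights are assumptions, not the quantization method used in this project.

```python
def quantize_int8(values):
    """Symmetric per-tensor quantization of floats to the range [-127, 127].

    Hypothetical helper for illustration; returns (quantized ints, scale).
    """
    max_abs = max(abs(v) for v in values)
    # One scale for the whole tensor; fall back to 1.0 for an all-zero tensor.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Map int8 codes back to approximate float values."""
    return [q * scale for q in quantized]


if __name__ == "__main__":
    weights = [-1.0, 0.0, 0.5, 1.0]  # made-up example weights
    codes, scale = quantize_int8(weights)
    restored = dequantize(codes, scale)
    print(codes, scale)
    print(restored)
```

Each stored value shrinks from 32 bits to 8, and the rounding error per value is bounded by half the scale, which is the basic trade-off behind quantized models.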

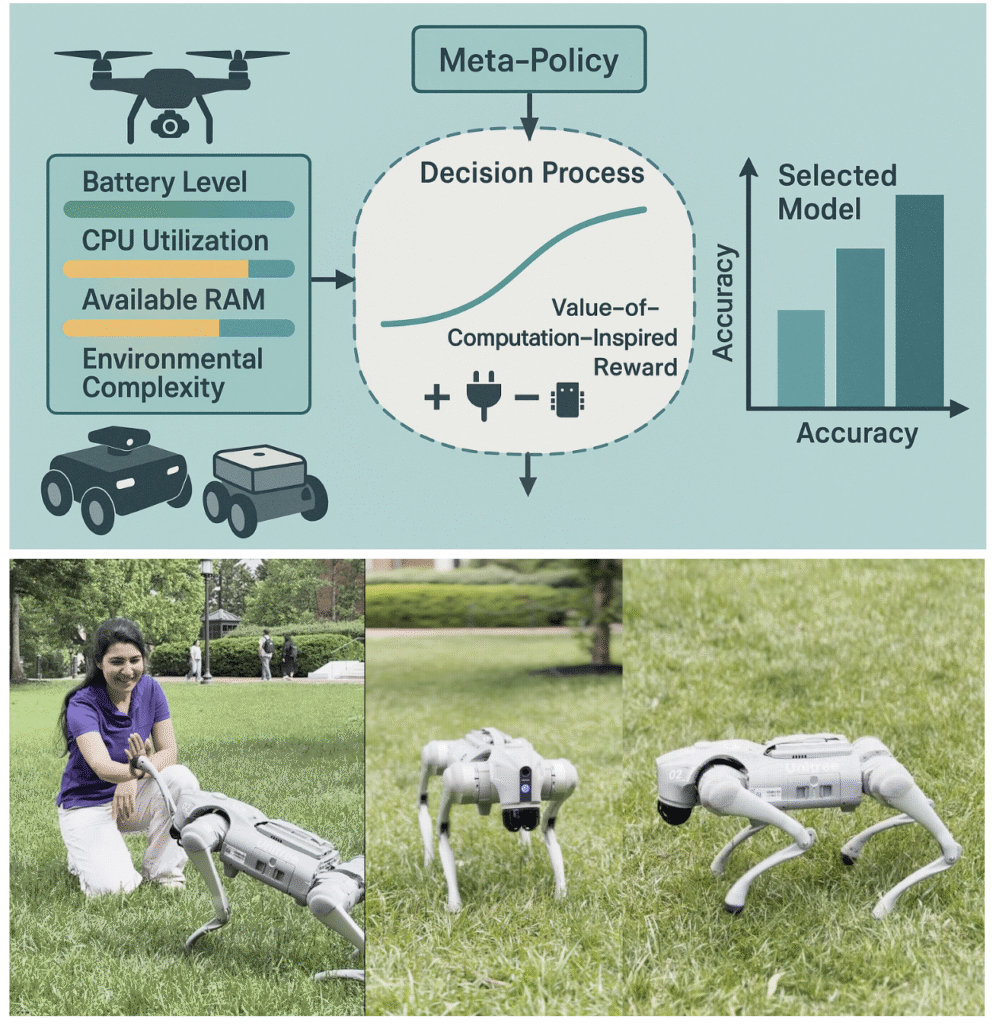

Metareasoning at the Edge: Enabling Intelligent Dynamic Model Switching for Embedded Systems

Modern embedded autonomous systems increasingly integrate deep learning techniques for perception and control. However, such devices face onboard processing challenges due to tight power budgets, low thermal design power (TDP), and limited memory bandwidth, commonly known as the “memory wall.” These constraints significantly limit the ability to run large models continuously or perform complex inference tasks onboard. While relying on a single, high-capacity model may improve accuracy, it often drains energy resources and strains memory. To address these limitations, we propose a metareasoning-based dynamic model-switching framework that uses a lightweight meta-policy to select the most efficient model, balancing accuracy against energy and memory constraints on edge devices. To evaluate the proposed framework, we deploy the metareasoning strategy on two real-world platforms: a nano drone equipped with a GAP8 processor and a Jetson Orin Nano-powered Yahboom Rosmaster wheeled robot.
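The idea of switching between models under resource budgets can be sketched as follows. This is a simple rule-based stand-in, not the meta-policy of the framework itself: the `ModelProfile` fields, the `select_model` function, and all numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelProfile:
    """Hypothetical per-model profile; all values below are invented."""
    name: str
    accuracy: float   # expected task accuracy in [0, 1]
    energy_mj: float  # energy per inference, millijoules
    memory_mb: float  # peak memory footprint, megabytes


def select_model(profiles, energy_budget_mj, memory_budget_mb):
    """Pick the most accurate model that fits both budgets.

    If nothing fits, fall back to the model with the lowest energy cost.
    """
    feasible = [
        p for p in profiles
        if p.energy_mj <= energy_budget_mj and p.memory_mb <= memory_budget_mb
    ]
    if not feasible:
        return min(profiles, key=lambda p: p.energy_mj)
    return max(feasible, key=lambda p: p.accuracy)


if __name__ == "__main__":
    zoo = [
        ModelProfile("tiny", 0.70, 5.0, 1.0),
        ModelProfile("small", 0.80, 20.0, 4.0),
        ModelProfile("large", 0.90, 80.0, 16.0),
    ]
    # With a mid-sized budget the "small" model is the best feasible choice.
    print(select_model(zoo, energy_budget_mj=25.0, memory_budget_mb=8.0).name)
```

A learned meta-policy would replace the fixed rule in `select_model`, for example by also conditioning on the current scene or battery state, but the interface of budgets in and model choice out stays the same.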

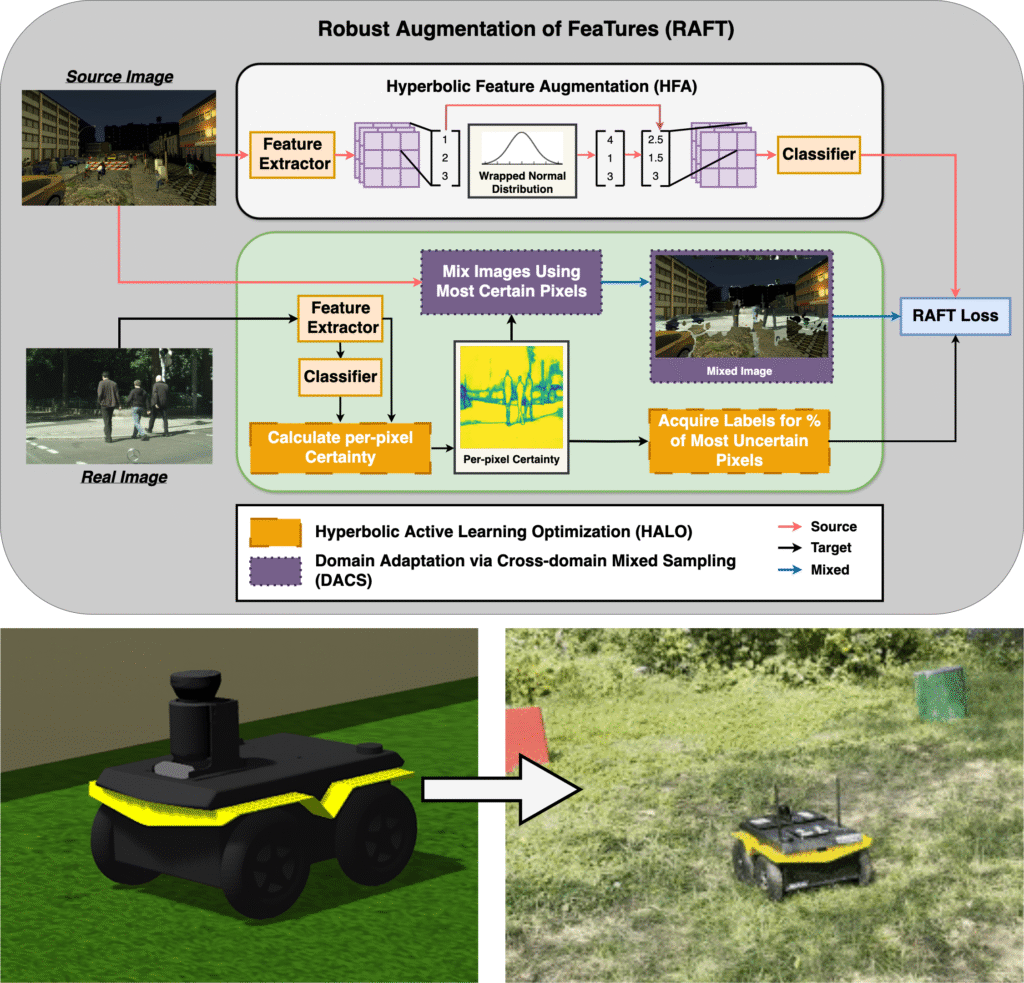

Synthetic-to-Real Transfer of Image Segmentation Models for Autonomous Navigation

Image segmentation enables scene understanding on edge and robotics platforms. However, training state-of-the-art image segmentation neural networks requires a large amount of training data. While synthetic data offers a potential solution, neural networks trained on synthetic data exhibit substantial degradation when deployed in the real world. Therefore, we develop a framework, which we call Robust Augmentation of FeaTures (RAFT), that combines active learning, self-supervised learning, and augmentations to reduce the amount of real-world annotations required for successful synthetic-to-real domain adaptation. With this framework, we achieve state-of-the-art results on the SYNTHIA-to-Cityscapes and GTAV-to-Cityscapes synthetic-to-real benchmarks.

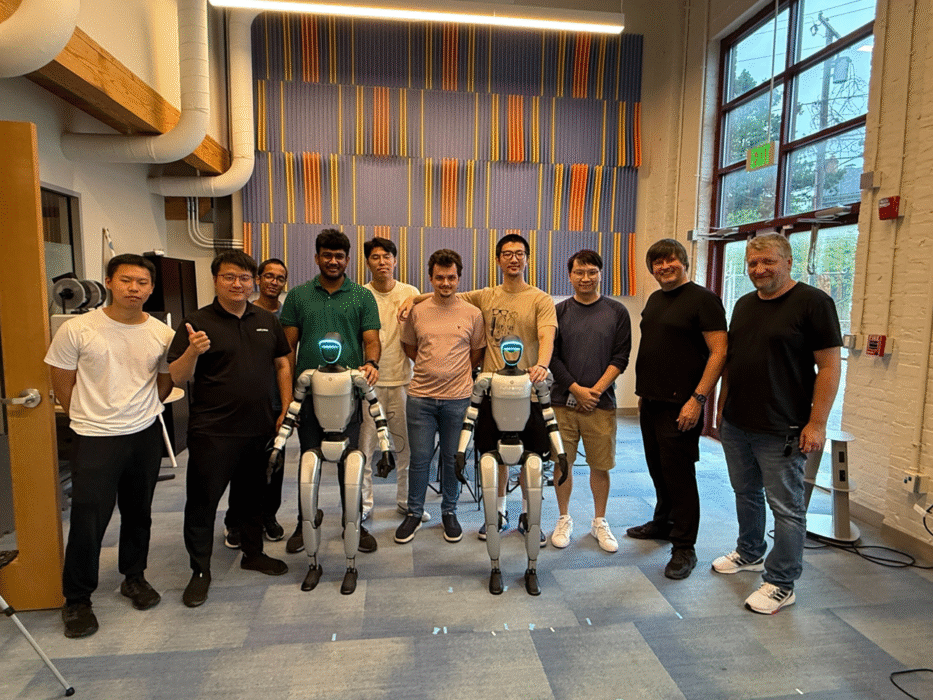

Autonomous Navigation for Legged Robots in Complex Environments

Legged robot navigation demands tight coordination between high-level planning and low-level locomotion control. We present a hierarchical framework for autonomous quadruped navigation that integrates real-time perception, path planning, and adaptive motor control. Starting with robust 2D navigation that accounts for gait patterns and kinodynamic constraints, the system scales to terrain-adaptive 3D traversal in unstructured environments. Using model predictive control (MPC) and learning-based policies, the robot generates feasible trajectories while maintaining the high-frequency control needed for stable motion. Multi-modal sensing and gait adaptation further enhance versatility across indoor and outdoor scenarios. This work advances reliable, autonomous mobility for legged platforms in real-world settings.

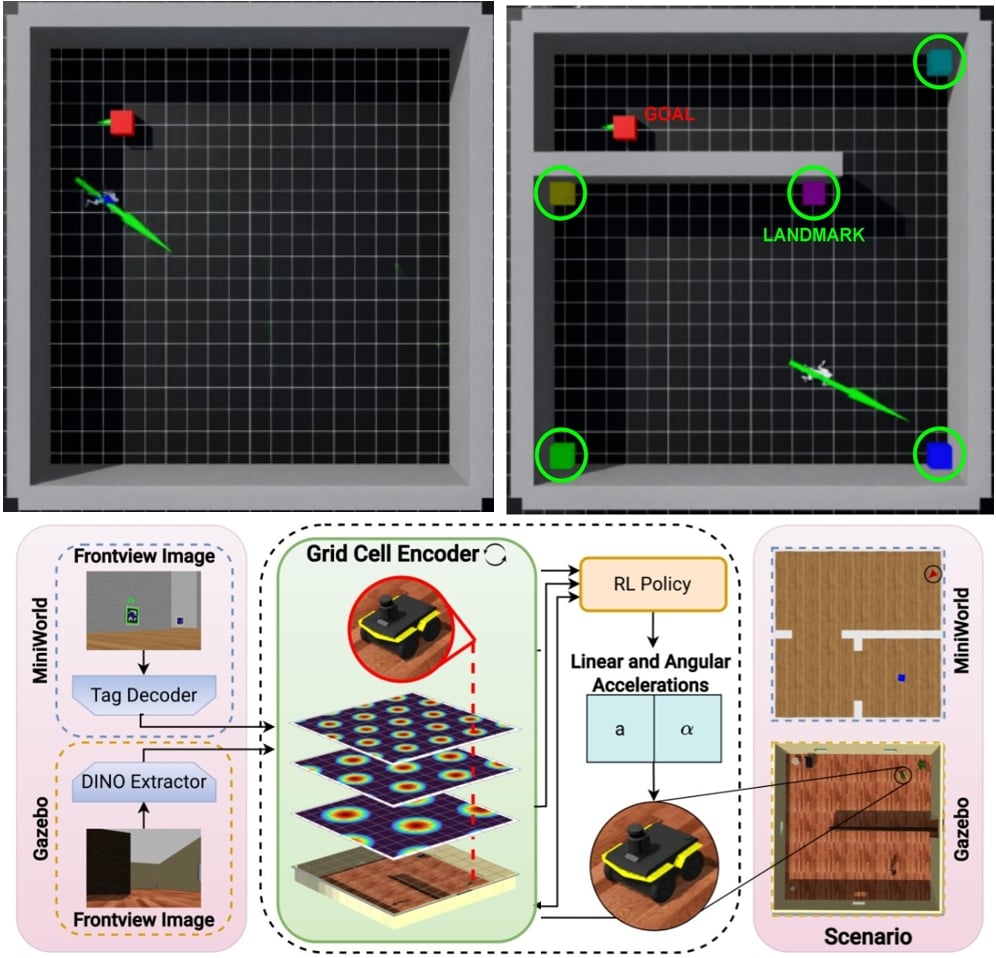

Neuro-Inspired Hierarchical Navigation for Legged Robots

Navigating complex environments requires both high-level spatial reasoning and low-level motor control—especially for legged robots operating in dynamic, unfamiliar settings. We explore a hybrid approach that integrates neuroscience-inspired spatial encoding with bilevel reinforcement learning for robust, map-free navigation. Drawing from entorhinal cortex models like EDEN, our system encodes egocentric observations into generalizable grid-cell-like representations, enabling memory-efficient goal pursuit. A high-level policy interprets these cues to generate velocity commands, while a low-level controller translates them into 12-DoF torque actions for quadruped locomotion. Trained in photorealistic Matterport3D environments using Isaac Sim, this architecture scales to real-world deployment on Unitree Go2, demonstrating adaptive and efficient mobility across diverse terrain. Our results highlight the power of combining brain-inspired perception with modular RL control for embodied agents.

Adaptive Medical Assistive Robots for Trauma Response

Robotic systems must operate reliably in unstructured, high-risk environments to support urgent medical needs. We present an autonomous quadruped-based medical assistant capable of performing critical trauma care tasks in the field. Using depth sensing and neural inference, the robot identifies key anatomical landmarks and executes optimized ultrasound scans to detect the femoral artery and its bifurcation—vital for hemorrhage control. A segmentation model reconstructs vascular structures in 3D to guide intervention. This platform lays the groundwork for fully autonomous, multi-robot medical response systems, enabling robust, mobile trauma care in disaster or combat scenarios.

Looking ahead, our platform serves as a foundation for broader autonomous medical support—enabling collaborative exploration, patient localization, and coordinated intervention across multi-robot systems. This work combines embodied AI, medical imaging, and real-time planning to support adaptive, field-deployable healthcare. The resulting datasets, algorithms, and modular architecture provide a pathway toward responsive, context-aware robotic assistance in real-world emergency scenarios.

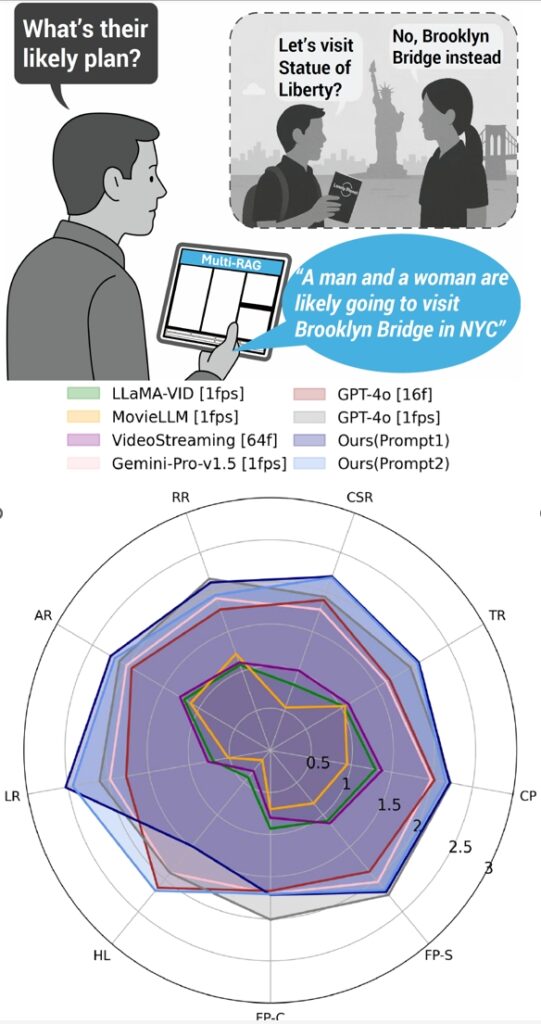

Multimodal Retrieval Augmented Generation (Multi-RAG)

Effective engagement in human society requires adapting, filtering information, and making informed decisions in dynamic situations. With robots increasingly integrated into human environments, there is a growing need to offload cognitive tasks to these systems, especially in complex, information-rich scenarios. We introduce Multi-RAG, a multimodal retrieval-augmented generation (RAG) system designed to assist humans by integrating and reasoning across video, audio, and text information streams. Multi-RAG aims to enhance situational understanding and reduce human cognitive load, paving the way for adaptive robotic assistance. Evaluated on a challenging video understanding benchmark, Multi-RAG outperforms existing open-source video and vision-language models while requiring fewer resources and less data. Our results highlight Multi-RAG’s potential as an efficient foundation for adaptive human-robot collaboration in dynamic real-world contexts.

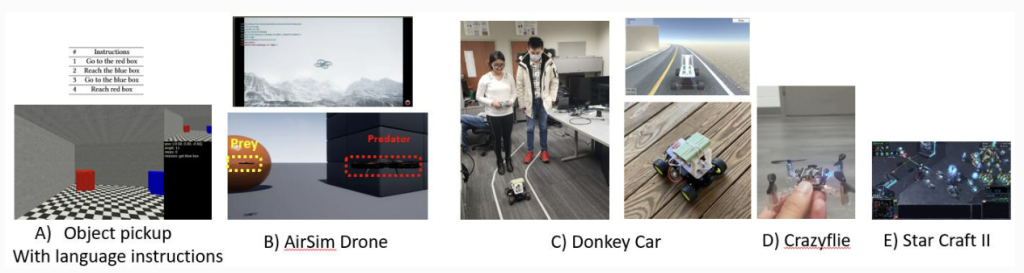

Energy Efficient Autonomous Systems and Robotics

Autonomous systems and robotics technology continue to play a major role in enabling future smart cities, transportation, energy management, logistics, smart sensing, home health care, and defense technologies. Machine learning and reinforcement learning techniques have shown great promise in the past few years, bringing us a few steps closer to deploying them in the real world. However, many challenges remain before we can deploy them safely and efficiently, especially when these technologies work closely with humans. Open issues include robustness, understanding and reasoning, adaptability, and awareness. In robotics and autonomous systems, real-time reaction to changes in the environment is also critical, yet the processors that currently run autonomous navigation algorithms still consume a great deal of energy. My funded research projects address these challenges by bridging the fields of robotics and hardware design.

Wearable Multi-Physiological Machine Learning for IoT Health

Wireless medical technologies have created opportunities for new methods of preventive care using implanted and body-worn biomedical devices. Designing the technologies that enable these applications requires correct delivery of the patient's vital physiological signs along with careful energy management in power-constrained devices. The high cost and even higher risk of battery replacement require that these devices be designed and developed for minimum energy consumption.

In this research, we explore a variety of ultra-low-power DSP techniques for wearable biomedical devices. A blend of feature engineering and machine learning algorithms is employed and evaluated within the context of real-time classification applications. The evaluation is based on two major criteria: (1) classification accuracy and (2) algorithmic complexity (computation, memory, latency). Currently, two case studies are being explored. The first case study is the detection of seizures for epileptic patients using multi-physiological signals in an ambulatory setting. The second case study is an assistive technology that enables a user to interact with their surroundings using a tongue-driven interface.

Tiny Scalable Deep Neural Network Accelerators

We explore the use of deep neural networks (DNNs) for embedded big data applications. Deep neural networks have been demonstrated to outperform state-of-the-art solutions for a variety of complex classification tasks, such as image recognition. The ability to train networks to perform both feature abstraction and classification provides a number of key benefits. One key benefit is that it reduces the burden on the developer to produce efficient, optimal feature engineering, which typically requires expert domain knowledge and significant time. A second key benefit is that the network’s complexity can be adjusted to achieve the desired accuracy. Despite these benefits, DNNs have yet to be fully realized in an embedded setting. In this research, we explore novel architecture optimizations and develop optimal static mappings for neural networks onto highly parallel, highly granular hardware processors such as CPUs, many-cores, embedded GPUs, FPGAs, and ASICs.

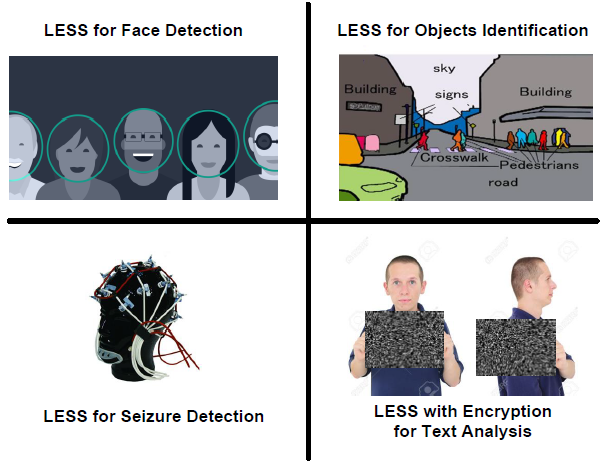

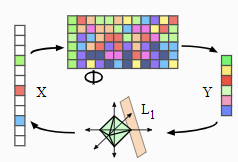

LESS: Light Encryption using Scalable Sketching

The LESS framework is adopted to accelerate big data processing on hardware platforms. LESS can reduce data communication by up to 67% while achieving a 3.81 dB Signal to Reconstruction Error Rate. The LESS framework has been applied to face detection, object and scene identification, text analysis, and biomedical applications. Integrating the LESS framework with the Hadoop MapReduce platform yields a 46% reduction in data transfers with a very low execution overhead of 0.11% and a negligible energy overhead of 0.001% when tested on 50,000 images.

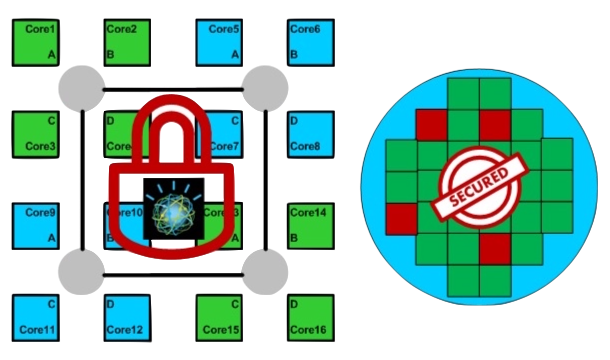

Energy Efficient Secured Many-Core Processor for Embedded Applications

The goal of this research is to design a real-time Trojan detection framework for a custom many-core processor that achieves very high detection accuracy with minimal hardware overhead and implementation complexity.

Efficient Compressive Sensing Reconstruction Algorithms and Architectures

Compressive sensing (CS) is a novel technology that allows sparse signals to be sampled at sub-Nyquist rates and then reconstructed using computationally intensive algorithms. Reconstruction algorithms are complex, and software implementations of these algorithms are extremely slow and power-hungry. In this project, we propose reduced-complexity reconstruction algorithms and efficient hardware implementations on different platforms, including FPGA, ASIC, GPU, and many-core platforms.
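As a rough illustration of what a CS reconstruction algorithm does, the sketch below implements Orthogonal Matching Pursuit (OMP), a standard greedy reconstruction method. It is chosen here only as a well-known example and is not claimed to be one of the reduced-complexity algorithms proposed in this project. The function names and the toy sensing matrix are assumptions, and the code assumes nonzero, linearly independent selected columns.

```python
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def solve_linear(matrix, rhs):
    """Solve matrix @ z = rhs by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    aug = [row[:] + [rhs[i]] for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    z = [0.0] * n
    for i in range(n - 1, -1, -1):
        tail = sum(aug[i][j] * z[j] for j in range(i + 1, n))
        z[i] = (aug[i][n] - tail) / aug[i][i]
    return z


def omp(columns, y, sparsity, tol=1e-12):
    """Recover a sparse x with A @ x = y, where `columns` lists A's columns."""
    residual = y[:]
    support, coeffs = [], []
    for _ in range(sparsity):
        # Score each unused column by its normalized correlation with the residual.
        scores = [abs(dot(c, residual)) / math.sqrt(dot(c, c)) for c in columns]
        for j in support:
            scores[j] = -1.0
        support.append(max(range(len(columns)), key=scores.__getitem__))
        # Least-squares fit on the current support via the normal equations.
        gram = [[dot(columns[i], columns[j]) for j in support] for i in support]
        proj = [dot(columns[i], y) for i in support]
        coeffs = solve_linear(gram, proj)
        residual = [
            y[t] - sum(cf * columns[j][t] for cf, j in zip(coeffs, support))
            for t in range(len(y))
        ]
        if math.sqrt(dot(residual, residual)) < tol:
            break
    x = [0.0] * len(columns)
    for cf, j in zip(coeffs, support):
        x[j] = cf
    return x


if __name__ == "__main__":
    # Toy 3x4 sensing matrix (3 measurements, 4 unknowns), given column by column.
    cols = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0], [1.0, 1.0, 1.0]]
    print(omp(cols, [4.0, 1.0, 1.0], sparsity=2))
```

The repeated least-squares solve inside the loop is the costly step, which is one reason reconstruction is slow in software and a natural target for hardware acceleration.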